DARPA helped make a sarcasm detector, because of course it did

Between rolling eyes, shrugging shoulders, jazz hands and warbling vocal inflection, it’s not hard to tell when someone is being sarcastic as they give you the business face to face. Online, however, you’re going to need that SpongeBob meme and a liberal application of the shift key to get your contradictory point across. Luckily for us netizens, DARPA’s Information Innovation Office (I2O) has teamed up with researchers from the University of Central Florida to develop a deep learning AI that can understand written sarcasm with a startling degree of accuracy.

“With the high velocity and volume of social media data, companies rely on tools to analyze data and to provide customer service. These tools perform tasks such as content management, sentiment analysis, and extraction of relevant messages for the company’s customer service representatives to respond to,” UCF Associate Professor of Industrial Engineering and Management Systems Dr. Ivan Garibay told Engadget via email. “However, these tools lack the sophistication to decipher more nuanced forms of language such as sarcasm or humor, in which the meaning of a message is not always obvious and explicit. This imposes an extra burden on the social media team, which is already inundated with customer messages, to identify these messages and respond appropriately.”

As they explain in a study published in the journal Entropy, Garibay and UCF PhD student Ramya Akula have built “an interpretable deep learning model using multi-head self-attention and gated recurrent units. The multi-head self-attention module aids in identifying crucial sarcastic cue-words from the input, and the recurrent units learn long-range dependencies between these cue-words to better classify the input text.”
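The study's model pairs multi-head self-attention with gated recurrent units. As a rough illustration of the attention half only, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is not the researchers' code: it omits the learned projections, the multiple heads and the recurrent layer, and the token embeddings are hypothetical numbers invented for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings):
    """Single-head scaled dot-product self-attention, no learned projections.

    Returns (weights, outputs): weights[i][j] is how strongly token i attends
    to token j, and outputs[i] is the attention-weighted average of all
    token embeddings.
    """
    dim = len(embeddings[0])
    weights = []
    for query in embeddings:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * vec[d] for w, vec in zip(row, embeddings)) for d in range(dim)]
        for row in weights
    ]
    return weights, outputs


# Hypothetical toy embeddings, invented for illustration only.
tokens = ["oh", "great", "another", "meeting", "!"]
toy_embeddings = [
    [0.1, 0.0, 0.2],
    [0.9, 0.8, 0.1],
    [0.2, 0.1, 0.0],
    [0.1, 0.3, 0.2],
    [0.8, 0.9, 0.2],
]

weights, outputs = self_attention(toy_embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row is one token's attention distribution over the whole sentence. In a trained model, inspecting rows like these is what lets a reader see which words the network treated as cues.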

“Essentially, the researchers’ approach is focused on discovering patterns in the text that indicate sarcasm,” Dr. Brian Kettler, a program manager at I2O who oversees the SocialSim program, explained in a recent press statement. “It identifies cue-words and their relationship to other words that are representative of sarcastic expressions or statements.”

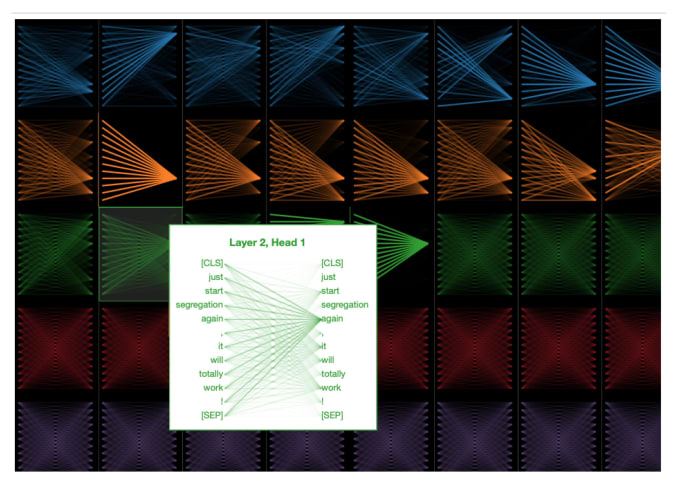

The team’s methodology differs from the approaches used in previous efforts to spot sarcasm on Twitter with machine learning. “The older way to approach it would be to sit there and define features that we’ll look at,” Kettler told Engadget, “maybe, linguists’ theories about what makes language sarcastic” or labeling markers drawn from sentiment-based context, such as a positive Amazon review of a product with a negative rating, or vice versa. The model also learned to attend to specific words and punctuation such as just, again, totally, and “!” once it noticed them. “These are the words in the sentence that hint at sarcasm and, as expected, these receive higher attention than others,” the researchers wrote.

Complex Adaptive Systems Lab, University of Central Florida

For this project, the researchers used a diverse array of datasets culled from Twitter, Reddit, The Onion, HuffPost and the Sarcasm Corpus V2 Dialogues from the Internet Argument Corpus. “That’s the beauty of this approach, all you need are training examples,” Kettler said. “Enough of those and the system will learn what features in the input text are predictive of language being sarcastic.”

The model also offers a level of transparency in its decision-making process that is not typically seen in deep learning AI models like these. The sarcasm AI shows users which linguistic features it learned and considered important in a given sentence through a visualization of its attention mechanism.


Even more impressive is the system’s accuracy. On the Twitter dataset, the model notched an F1 score of 98.7 (8.7 points higher than its closest rival), while on the Reddit dataset it scored 81.0, four points above the competition. On Headlines, it scored 91.8, more than five points ahead of similar detection systems, though it appears to have struggled a bit with the Dialogues (only hitting an F1 of 77.2).
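For readers unfamiliar with the metric, an F1 score is the harmonic mean of precision and recall, and the figures quoted here are on a 0 to 100 scale. The sketch below shows the standard calculation for a binary sarcastic/not-sarcastic classifier. The labels and predictions are made-up toy data, not results from the study.

```python
def f1_score(y_true, y_pred, positive=1):
    """F1 for the positive class: harmonic mean of precision and recall."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision or recall is zero, so F1 is zero.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy data, invented for illustration: 1 = sarcastic, 0 = not sarcastic.
labels      = [1, 1, 1, 0, 0, 1, 0, 1]
predictions = [1, 1, 0, 0, 1, 1, 0, 1]

score = f1_score(labels, predictions)
print(f"F1 on a 0-100 scale: {100 * score:.1f}")
```

In this toy case the classifier finds four of the five sarcastic examples and raises one false alarm, so precision and recall are both 0.8.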

As the model develops further, it could become an invaluable tool for both the public and private sectors. Kettler sees this AI fitting into the larger purpose of the SocialSim program. “It’s a piece of the larger work that we’re doing, which is looking at and understanding the online information environment,” he said, trying to figure out “at a higher level of engagement [and] how many people are likely to engage with what kinds of information.”

For example, when the NIH or the CDC conducts a public health campaign and solicits feedback online, administrators will have an easier time gauging the public’s overall view of the campaign once the sarcastic responses from trolls and shitposters have been filtered out.

“We want to understand sentiment,” he continued. “Where people are, broadly, people liking something or disliking something, and sarcasm can really fool sentiment detection … It’s an important enabling technology that allows the machine to better interpret what we’re seeing online.”

The UCF team plans to develop the model further so that it can be used for languages other than English before eventually open-sourcing the code. However, Garibay notes that one potential sticking point will be their ability to generate “high-quality datasets in multiple languages. Then the next big challenge would be handling ambiguities, colloquialisms, slang, and coping with language evolution.”

