EU law may regulate robot surgeons but not military AI

As U.S. lawmakers work through yet another hearing on the dangers of algorithmic bias in social media, the European Commission (essentially the EU’s executive body) has unveiled a regulatory framework that, if adopted, could have a global impact on AI development.

This is not the Commission’s first attempt to guide the growth and development of this technology. After extensive meetings with security forces and other stakeholders, the EC released both its first European Strategy on AI and its Coordinated Plan on AI in 2018. Those were followed in 2019 by the Guidelines for Trustworthy AI, and in 2020 by the Commission’s White Paper on AI and its Report on the safety and liability implications of Artificial Intelligence, the Internet of Things and robotics. As it did with the General Data Protection Regulation (GDPR) in 2018, the Commission is seeking to establish a foundation of public trust in the technology, based on strong protections for users and their data privacy.

Olivier Hoslet via Getty Images

“Artificial intelligence should not be an end in itself, but a tool that has to serve people with the ultimate aim of increasing human well-being. Rules for artificial intelligence available in the Union market or otherwise affecting Union citizens should thus put people at the centre (be human-centric), so that they can trust that the technology is used in a way that is safe and compliant with the law, including the respect of fundamental rights,” the Commission wrote in its draft. “At the same time, such rules for artificial intelligence should be balanced, proportionate and not unnecessarily constrain or hinder technological development. This is of particular importance because, although artificial intelligence is already present in many aspects of people’s daily lives, it is not possible to anticipate all possible uses or applications thereof that may happen in the future.”

Indeed, AI systems are already ubiquitous in our lives – from the recommendation algorithms that help us decide what to watch on Netflix and whom to follow on Twitter, to the digital assistants in our phones and the driver-assist systems that watch the road for us (or don’t) when we drive.

“The European Commission has once again stepped out boldly to address emerging technology, just as it did with data privacy through the GDPR,” Brandie Nonnecke, Director of the CITRIS Policy Lab at UC Berkeley, told Engadget. “The proposed regulation is quite interesting in that it is attacking the problem with a risk-based approach,” similar to the one used in Canada’s proposed AI regulatory framework.

The new rules would divide the EU’s AI development efforts into four categories – minimal risk, limited risk, high risk, and banned outright – based on their potential harm to society. “The risk framework they work within is really about risk to society, whereas whenever you hear risk talked about [in the US], it’s pretty much risk in the context of, ‘What’s my liability, what’s my exposure?’” Jennifer King, Privacy and Data Policy Fellow at the Stanford University Institute for Human-Centered Artificial Intelligence, told Engadget. “And somehow if that encompasses human rights as part of that risk, then it gets folded in, but to the extent that it can be externalized, it’s not included.”

Uses of the technology that are banned outright include any applications that manipulate human behavior to circumvent users’ free will – especially those that exploit the vulnerabilities of a specific group due to their age or physical or mental disability – as well as ‘real-time’ biometric identification systems and those that allow ‘social scoring’ by governments, according to the 108-page proposal. This is a direct nod to China’s Social Credit System, and since the regulations would still govern technologies that affect EU citizens whether or not those people are physically within the EU’s borders, it could soon lead to some interesting international incidents. “There’s a lot of work to be done to move forward on operationalizing the guidance,” King said.

Jochen Eckel / Reuters

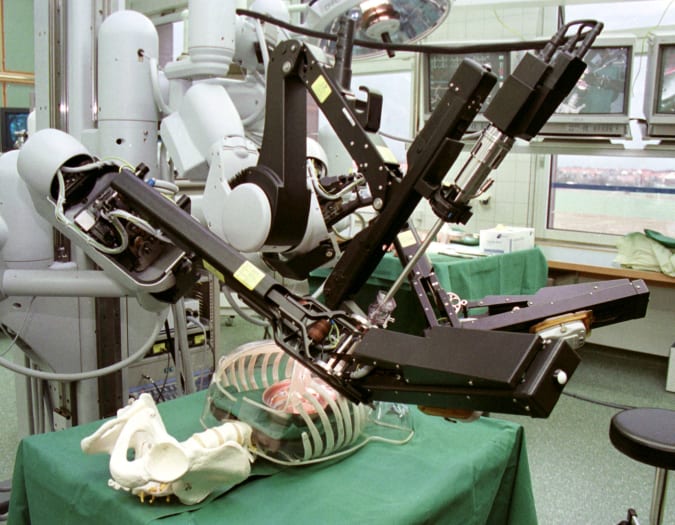

High-risk applications, on the other hand, are defined as any products where the AI is “intended to be used as a safety component of a product” or where the AI is the safety component itself (think of the collision-avoidance feature in your car). AI applications in areas such as legal and judicial matters and recruitment are also considered high risk. These can come to market but are subject to strict requirements before they go on sale, such as requiring the AI maker to stay compliant with EU regulations throughout the product’s lifecycle, ensure privacy, and keep a human in control. That means robosurgeons will not be fully autonomous for the foreseeable future.

“The read I have from Europeans is that they seem to be envisioning oversight – I don’t know if it’s overreach to say from cradle to grave,” King said. “But there seems to be a sense that there needs to be ongoing monitoring, especially of hybrid systems.” Part of that oversight is the EU’s push for AI regulatory sandboxes, which would let developers build and test systems under real-world conditions but without real-world consequences.

These sandboxes, in which all non-governmental entities – not just those large enough to have independent R&D budgets – are free to develop their AI systems under the supervision of EC regulators, “are intended to prevent the kind of chilling effect seen as a result of the GDPR, which led to a 17% increase in market concentration after it was introduced,” Jason Pilkington recently argued for Truth on the Market. “But it is unclear that they would accomplish this goal.” The EU also plans to establish a European Artificial Intelligence Board to oversee compliance efforts.

Nonnecke also pointed out that many of the areas covered by the high-risk rules are ones that academic researchers and journalists have been examining for years. “I think this really underscores the importance of empirical research and investigative journalism in helping lawmakers better understand what the risks of these AI systems are and also what the benefits of these systems are,” she said. One area where these rules explicitly will not apply is AI built specifically for military use, so bring on the killbots!

Ben Birchall – PA Images via Getty Images

Limited-risk applications include things like chatbots on a customer-support website or products featuring deepfake content. In these cases, the AI maker simply has to tell users up front that they will be interacting with a machine rather than another person (or even a dog). And for minimal-risk products, such as the AI in video games and the vast majority of applications the EC expects to see, the rules do not impose any special restrictions or added requirements that must be met before going to market.

And if any company or developer dares to ignore these regs, they will find that running afoul of them comes with a hefty fine – one that can be measured in percentages of GDP. Specifically, fines for noncompliance can reach up to 30 million euros or 4% of the entity’s global annual revenue, whichever is greater.
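To make the "whichever is greater" rule concrete, here is a minimal Python sketch of the maximum fine, using only the two figures quoted above (30 million euros and 4% of global annual revenue). The function and constant names are illustrative assumptions, not terms from the proposal, and the sketch says nothing about how regulators would actually set a fine below that ceiling.

```python
# Illustrative only: figures are those quoted in this article;
# the names below are hypothetical, not taken from the EU proposal.
FIXED_CAP_EUR = 30_000_000      # flat ceiling of 30 million euros
REVENUE_SHARE_PERCENT = 4       # 4% of global annual revenue


def max_fine_eur(global_annual_revenue_eur: float) -> float:
    """Return the upper bound of the fine: the greater of the two caps."""
    if global_annual_revenue_eur < 0:
        raise ValueError("revenue must be non-negative")
    revenue_based_cap = global_annual_revenue_eur * REVENUE_SHARE_PERCENT / 100
    return max(FIXED_CAP_EUR, revenue_based_cap)


if __name__ == "__main__":
    for revenue in (100_000_000, 750_000_000, 2_000_000_000):
        print(f"revenue {revenue:>13,} EUR -> max fine {max_fine_eur(revenue):,.0f} EUR")
```

Under these figures the flat 30 million euro ceiling applies to any entity with global annual revenue below 750 million euros; above that point the 4% share becomes the larger number.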

“It is important for us at a European level to send a very strong message and set the standards in terms of how far these technologies should be allowed to go,” Dragos Tudorache, a member of the European Parliament and head of its committee on artificial intelligence, told Bloomberg in a recent interview. “Putting a regulatory framework around them is a must, and it is good that the European Commission is taking this direction.”

Whether the rest of the world will follow Brussels’ lead in this regard remains to be seen. Given how the rules currently define what AI is – and they do so in very broad terms – we can expect the legislation to affect nearly every part of the global market and every sector of the global economy, not just the digital sector. Of course, the rules will first have to pass through a rigorous (and often contentious) legislative process that could take years to complete before they are enacted.

As for America’s chances of enacting similar rules of its own, well. “I think we’ll see something proposed at the federal level, yeah,” Nonnecke said. “Do I think it’ll be passed? Those are two different things.”
